A Gaussian approximation potential (GAP) for the Zundel cation

The present notebook is meant to give you an overview of the main ingredients that you need to build an interatomic potential with librascal and use it in connection with i-Pi (https://github.com/lab-cosmo/i-pi) to generate molecular dynamics (MD) trajectories of the system of interest. We will start from building a GAP model for Zundel cations (\(H_5O_2+\)), using a training set obtained via Bowman PES sampling, calculate its RMSE on a test set to check its performance and run a short

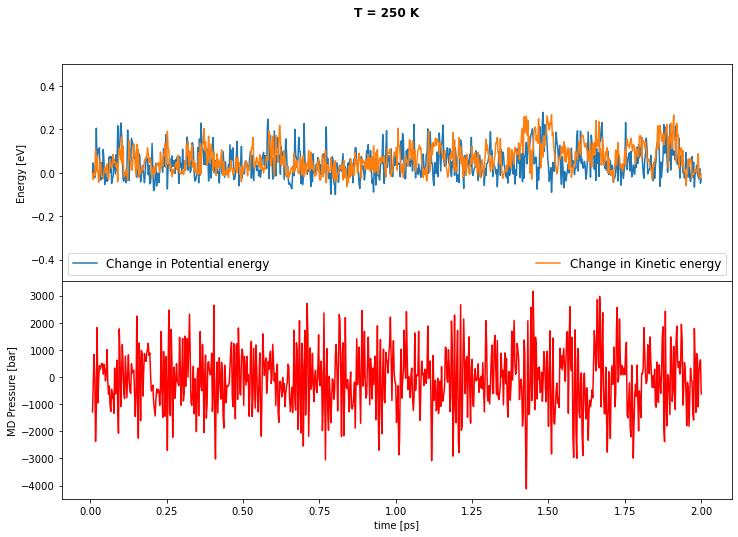

NVT simulation at \(\text{T} = 250\,\text{K}\).

The mathematical framework that we are going to use is the kernel-GAP fitting method, using both total energies and atomic forces as target properties. Basically the GAP-model total energy of a zundel molecule is computed using the following expression:

where \(E_0\) represents an energy baseline (given usually by the sum of atomic self-contributions), \(\bf{d_i}\) (\(i = 1, \dots, N_{atoms}\)) are the set of (normalized SOAP) descriptors describing an environment centred around atom \(i\), \(\bf{d_s}\) the set of descriptors corresponding to the environments of the sparse set (of size \(M\)) and K a kernel matrix that describes the similarity of two different atomic environments. In our application, the kernel is just the dot product, raised to some integer power \(\zeta\):

Finally \(\alpha_s\) represents the weights of each sparse environment, to be determined using Kernel-Ridge Regression (KRR). For extensive details on the SOAP GAP-model fitting procedure and interesting physical applications, we invite the reader to refer to the book chapter [1]. Details on the implementation in librascal are instead given in the companion publication [2].

[1]: M. Ceriotti, M.J. Willatt, and G. Csányi, in Handbook of Materials Modeling (Springer International Publishing, Cham, 2018), pp. 1–27. https://doi.org/10.1007/978-3-319-42913-7_68-1

[2]: F. Musil, M. Veit, A. Goscinski, G. Fraux, M.J. Willatt, M. Stricker, T. Junge, and M. Ceriotti, J. Chem. Phys. 154, 114109 (2021). https://doi.org/10.1063/5.0044689

Getting started

In order to be able to fit a potential with librascal (the model evaluator) and interface it with i-Pi (the MD engine) we first need to have both softwares available and correctly installed. For librascal, the following should suffice:

$ pip install git+https://github.com/lab-cosmo/librascal.git

and the same for i-PI:

$ pip install git+https://github.com/lab-cosmo/i-pi.git

If these steps don’t work, try looking at the README.rst files of the respective repositories for further instructions.

Importing all the necessary Librascal modules

Let us start from the import of all the necessary modules and classes. In librascal, these are:

the SOAP descriptors of a structure, by means of the

SphericalInvariantsclass;the kernels between a set of environments and the sparse set (the

Kernelclass);the GAP model itself, which is saved as an object of the

KRRclass. The predict method of the same class will allow us to give predictions to new unseen structures.

NOTE: it is assumed that the input format for the training set and the test set is an ASE-compatible (e.g. extended-XYZ) file, which is then converted into an array of ASE Atoms objects, each one corresponding to a specific structure. librascal uses these frames to compute the representations and predict the properties of new structures. In this example on zundel cations, the target energies to build the model with are reported in the zundel_energies.txt file, while the atomic forces are

additional columns of the xyz.

Alternatively, global target properties can be reported in the header line following the ASE format.

[1]:

%matplotlib inline

from matplotlib import pylab as plt

import ase

from ase.io import read, write

from ase.build import make_supercell

from ase.visualize import view

import numpy as np

# If installed -- not essential, though

try:

from tqdm.notebook import tqdm

except ImportError:

tqdm = (lambda i, **kwargs: i)

from time import time

from rascal.models import Kernel, train_gap_model, compute_KNM

from rascal.representations import SphericalInvariants

from rascal.utils import from_dict, to_dict, CURFilter, dump_obj, load_obj

[2]:

cd i-PI/zundel/

/ssd/local/code/librascal-zundel/examples/i-PI/zundel

In the snippets below we extract the relevant properties for each ASE frame. We load the total potential energies and we use the ASE.Atoms.arrays methods to get the atomic forces. For more information on how to use ASE-related methods, check the ASE Atoms documentation.

[3]:

# Load the first N structures of the zundel dataset

N_dataset = 1000

frames = read('zundel_dataset.xyz', index=':{}'.format(N_dataset))

energies = np.loadtxt('zundel_energies.txt')[:N_dataset]

#Keys of the arrays dictionary

print(frames[0].arrays.keys())

dict_keys(['numbers', 'positions', 'forces'])

[4]:

def extract_forces(frames,array_key='zeros'):

f = []

for frame in frames:

if array_key is None:

pass

elif array_key == 'zeros':

f.append(np.zeros(frame.get_positions().shape))

else:

f.append(frame.get_array(array_key))

try:

f = np.concatenate(f)

except:

pass

return f

Build the GAP model

The preliminary step towards building a GAP model is defining a training and a test set. The training set is built by random selection of \(800\) of the available structures, while the remaining \(200\) will represent the test set. At this point, we also extract the distinct atomic species present in our system, define the atomic baseline energy and extract the information regarding energies and forces in the train and test set structures, which we will need later on.

[5]:

# Number of structures to train the model with

n = 800

global_species = set()

for frame in frames:

global_species.update(frame.get_atomic_numbers())

global_species = np.array(list(global_species))

# Select randomly n structures for training the model

ids = list(range(N_dataset))

np.random.seed(10)

np.random.shuffle(ids)

train_ids = ids[:n]

frames_train = [frames[ii] for ii in ids[:n]]

y_train = [energies[ii] for ii in ids[:n]]

y_train = np.array(y_train)

[6]:

f_train = extract_forces(frames_train, 'forces')

f = extract_forces(frames, 'forces')

[7]:

# Atomic energy baseline

atom_energy_baseline = np.mean(energies)/(frames[0].get_global_number_of_atoms())

energy_baseline = {int(species): atom_energy_baseline for species in global_species}

Now we proceed with the actual calculation of the SOAP vectors of our training set. We need to specify an hyperparameters dictionary, which librascal uses to compute the structural features. The meaning of each hyperparameter and how to correctly set them is reported in the ``SphericalInvariants` documentation <https://lab-cosmo.github.io/librascal/reference/python.html#rascal.representations.SphericalInvariants>`__. These hyperparameters can be used as default values, but a careful

optimization of the interaction cutoff might be required in the case the material under investigation might present some mid- or long-range order.

For the actual calculation of the SOAP features, we first create an object of the SphericalInvariants class, defined by its hyperparameters. The methods that we then need to use are transform()}, which yields a second object called the manager containing the representation, while get_features() converts it into an \(NxM\) matrix, \(N\) being the number of atomic environments in the training set and M the number of features per each environment.

At this point, the compute_gradients hyper should be set to False. For now, we will only use the SOAP representations (no gradients!) of the training set structures to select a sparse set using the CUR decomposition. Setting it to True would require Librascal to compute all the gradients of the SOAP representation w.r.t. atomic coordinates, thus making the structural managers unnecessarily memory expensive. We will instead compute later on the gradients of the sparse kernels using

the compute_KNM method when we fit the GAP model.

[8]:

# define the parameters of the spherical expansion

hypers = dict(soap_type="PowerSpectrum",

interaction_cutoff=3.0,

max_radial=6,

max_angular=4,

gaussian_sigma_constant=0.5,

gaussian_sigma_type="Constant",

cutoff_function_type="RadialScaling",

cutoff_smooth_width=0.5,

cutoff_function_parameters=

dict(

rate=1,

scale=3.5,

exponent=4

),

radial_basis="GTO",

normalize=True,

optimization=

dict(

Spline=dict(

accuracy=1.0e-05

)

),

compute_gradients=False

)

soap = SphericalInvariants(**hypers)

The following snippet computes the descriptors and the managers for each frame, which will represent the expensive part of the calculation, in terms of memory usage.

NOTE: the librascal structure manager only works if the atoms have been wrapped within the cell provided in the input file using e.g. ase.Atoms.wrap(), or one of the structure preprocessors provided in rascal.neighbourlist.structure_manager module (useful especially for non-periodic structures).

[9]:

managers = []

for f in tqdm(frames_train):

f.wrap(eps=1e-18)

start = time()

managers = soap.transform(frames_train)

print ("Execution: ", time()-start, "s")

Execution: 0.12206697463989258 s

At this point, we can define the sparse set, i.e. the set of (ideally) “maximally diverse” environments that will be used as basis points of the kernel ridge regression (KRR, mostly equivalent to GAP) model. We can choose the number of sparse environments in librascal per each atomic species, by defining a dictionary containing (atomic number, number of environments) pairs. The CURFilter class then selects out the most “representative” environments according to the representation that we

provide as the input object and by applying the CUR decomposition [3].

[3]: 1 M.W. Mahoney and P. Drineas, PNAS 106, 697 (2009). https://doi.org/10.1073/pnas.0803205106

[10]:

# select the sparse points for the sparse kernel method with CUR on the whole training set

n_sparse = {1:50, 8:100}

compressor = CURFilter(soap, n_sparse, act_on='sample per species')

X_sparse = compressor.select_and_filter(managers)

The number of pseudo points selected by central atom species is: {1: 50, 8: 100}

Selecting species: 1

Reconstruction RMSE=1.463e+00

Selecting species: 8

Reconstruction RMSE=1.577e-02

As shown in eq. \((1)\), in order to fit a GAP potential we need to compute the kernels of all the training structure descriptors and the sparse set, as well as the gradients w.r.t. the atomic positions, if we wish to fit the atomic forces. We do so by using another key structure of librascal: the Kernel class. We first build a kernel object containing the representation (SOAP), which we then use to compute the sparse kernel matrix \(K_{NM}\) between the training set and the sparse

set and its gradients using the compute_KNM method.

Finally, we group everything together to build the GAP model, which basically uses the kernel matrices to regress the weights \(\{\alpha_s\}_{s=1}^M\) on each sparse environment. The lambdas are the regularization parameters of the KRR (for both energies and forces), while jitter is a small parameter that enables its numerical convergence.

The output is a KRR object, which we can save as .json file for future use. For this last bit we use the dump_obj method (part of the rascal.utils.io module).

[11]:

zeta = 2

start = time()

hypers['compute_gradients'] = True

soap = SphericalInvariants(**hypers)

kernel = Kernel(soap, name='GAP', zeta=zeta, target_type='Structure', kernel_type='Sparse')

KNM = compute_KNM(tqdm(frames_train, leave=True, desc="Computing kernel matrix"), X_sparse, kernel, soap)

model = train_gap_model(kernel, frames_train, KNM, X_sparse, y_train, energy_baseline,

grad_train=-f_train, lambdas=[1e-12, 1e-12], jitter=1e-13)

# save the model to a file in json format for future use

dump_obj('zundel_model.json', model)

np.savetxt('Structure_indices.txt', ids)

print ("Execution: ", time()-start, "s")

Execution: 1.5349509716033936 s

Assessment of the model

To perform a basic assessment of the model we have fitted, we need to predict the properties (energies and forces) of a test set. In the calculation of the model that we have fitted previously, we have randomly splitted the original dataset in a training set of 800 structures and a test set of another 200. So first we load the model and the indices corresponding to the shuffled dataset: the first 800 of those will correspond to the training set, the last 200 to the test set.

[12]:

n = 800

model = load_obj('zundel_model.json')

ids = np.loadtxt('Structure_indices.txt')

[13]:

train_ids = [int(i) for i in ids[:n]]

test_ids = [int(i) for i in ids[n:]]

frames_train = [frames[ii] for ii in train_ids]

frames_test = [frames[ii] for ii in test_ids]

y_train = [energies[ii] for ii in train_ids]

y_train = np.array(y_train)

y_test = [energies[ii] for ii in test_ids]

y_test = np.array(y_test)

Let us now compute the predictions on the test set, using the predict() and predict_forces() methods of the KRR class. At this point we need to compute the SOAP representation of the test set structures. It is important to stress however that this will not cause any issue regarding memory usage, because all we need is the predictions, so we can compute the managers of the test set structures one by one and calculate the predictions right away. Instead for the GAP fitting we need to

store ALL the structure managers of the training set to perform the regression (which causes a large RAM usage).

[14]:

# make predictions on the test set

y_pred = []

f_pred = []

for f in tqdm(frames_test):

positions = f.get_positions()

f.set_positions(positions+[1,1,1])

f.wrap(eps=1e-18)

m = soap.transform(f)

y_pred.append(model.predict(m))

f_pred.append(model.predict_forces(m))

y_pred = np.array(y_pred).flatten()

f_pred = np.array(f_pred)

[15]:

f_test = extract_forces(frames_test, 'forces')

Let us now compute the overall performance of the model. This is done by calculation of the Root-Mean-Square Error (RMSE), i.e. the standard deviation of the residuals according to the standard formula:

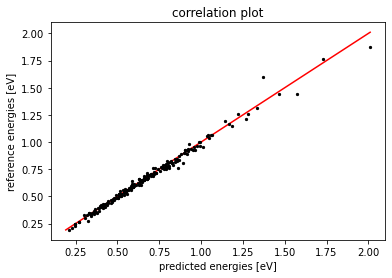

which we can compare to the standard deviation of the test set itself to quantify how much the model captures the energy variations in the test set. The \(\% \text{RMSE}\) of our model is about \(5 \%\) of the training set STD, which is sufficiently accurate to run MD safely.

Finally we plot a “correlation plot”, to observe how well the predictions on the test set correlate with the reference DFT-computed energies.

NOTE: by just repeating this procedure and fitting potentials with increasing training set size, one can also construct the learning curve of the GAP.

[16]:

from rascal.utils import get_score

score = get_score(y_pred, y_test)

RMSE = score['RMSE']

sigma_test = np.std(y_test)

print("RMSE = ", RMSE*1000.0, "meV")

print("Sigma test set = ", sigma_test, " eV")

print("%RMSE = ", RMSE/sigma_test*100.0, " %")

RMSE = 30.087996494496597 meV

Sigma test set = 0.274800153468654 eV

%RMSE = 10.949046466936812 %

[17]:

plt.scatter(y_test, y_pred, s=5.0, c = 'black')

lims = [

np.min([np.min(y_pred), np.min(y_test)]), # min of both axes

np.max([np.max(y_pred), np.max(y_test)]), # max of both axes

]

# now plot both limits against eachother

plt.plot(lims, lims, 'k-', zorder=0, color='red')

plt.title("correlation plot")

plt.xlabel("predicted energies [eV]")

plt.ylabel("reference energies [eV]")

[17]:

Text(0, 0.5, 'reference energies [eV]')

MD simulations

Now we are going to use the fitted model to perform a simple NVT simulation at \(\text{T} = 250\,\)K using the i-Pi interface with librascal. For that we will use a communication socket run by i-Pi, which basically outputs a structure produced by the MD and gives it in input to Librascal. This will in turn return energies, forces and stresses by means of the GenericMDCalculator class of Librascal.

The job itself will generate a parent process (i-Pi) which contains information of the ensemble, the step needed for the time-integration, the thermostat characteristics, and all other trajectory-related infos. All these information are initially stored in an input.xml file as specified in the i-Pi documentation at https://github.com/lab-cosmo/i-pi and given as inputs to i-Pi. The Librascal calculator is then launched as a child process and exchanges information with the MD driver.

The Librascal driver in i-Pi needs some input parameters, that can be given directly in the command line when the driver is called. To check the needed information, just use the –help option when calling it, as shown below.

NOTE: in what follows, it is assumed that your i-Pi and Librascal folders lie in a common directory. Check and modify the path below when defining IPI if this is not the case.

Check the XML file in the i-PI/zundel folder to quickly read the relevant information about the MD settings. Importantly, the <properties> tag gives you information about the physical properties that are printed out by i-PI. This simulation gives as output a .out file containing the time-evolution of all the relevant physical quantities, while the .xc.xyz file contains the full trajectory, which you can later visualize with VMD. The file h5o2+.xyz is both used by i-PI as a starting

configuration of the trajectory and by Librascal as a template to load information about chemical species and number of atoms per species of the system.

As the simulation evolves you can plot some interesting physical properties, for instance the MD kinetic energy, the total potential energy and the pressure, and check for thermalization.

An example script is provided to launch a MD simulation with i-PI and the model that has just been fitted zundel_model.json (note that you may need to edit some variables in the script, or set $PATH and $PYTHONPATH appropriately, in order to make it work on your system):

bash ./run.sh

and the simulation can be monitored with the following cell.

[18]:

#Plotting the results of the simulations

try:

f = open('zundel.out', 'r')

except FileNotFoundError:

f = open('zundel.example.out', 'r')

lines=f.readlines()[13:]

Nframes = len(lines)

steps = np.zeros((Nframes, 1), dtype=float)

time = np.zeros((Nframes, 1), dtype=float)

KE = np.zeros((Nframes, 1), dtype=float)

PE = np.zeros((Nframes, 1), dtype=float)

Pressure = np.zeros((Nframes, 1), dtype=float)

i = 0

for x in lines:

steps[i] = float(x.split()[0])

time[i] = float(x.split()[1])

KE[i] = float(x.split()[4])

PE[i] = float(x.split()[5])

Pressure[i] = float(x.split()[6])

i += 1

f, axs = plt.subplots(2, 1, sharex=True, figsize=(12, 8))

f.subplots_adjust(hspace=0)

f.suptitle('T = 250 K', fontweight='bold')

axs[0].plot(time, (KE - KE[0]), linewidth = 1.5, label = 'Change in Potential energy')

axs[0].plot(time, (PE - PE[0]), linewidth = 1.5, label = 'Change in Kinetic energy')

axs[0].set(ylabel= 'Energy [eV]')

axs[0].set_ylim(-0.5, 0.5)

axs[0].legend(ncol = 2, mode='expand', prop={'size': 12}, loc="lower center")

axs[1].plot(time, Pressure, linewidth = 1.5, color = 'red')

axs[1].set(ylabel= 'MD Pressure [bar]')

axs[1].set(xlabel= 'time [ps]')

[18]:

[Text(0.5, 0, 'time [ps]')]