Getting insights about the model

In the first tutorial, we calculated invariant representations of atomic environments and used them for the prediction of energies.

But it is always good to have some understanding of the model. This tutorial will show how to get spectrums of pca along with the number of covariants after each transformation.

First of all, we need fitted model. This preliminary cell reproduces the corresponding part of the first tutorial, “constructing machine learning potential”: (few hypers are changed)

Preliminaries

# cell to wrap in collapsible in future

# downloading dataset from https://archive.materialscloud.org/record/2020.110

!wget "https://archive.materialscloud.org/record/file?file_id=b612d8e3-58af-4374-96ba-b3551ac5d2f4&filename=methane.extxyz.gz&record_id=528" -O methane.extxyz.gz

!gunzip -k methane.extxyz.gz

import numpy as np

import ase.io

import tqdm

from nice.blocks import *

from nice.utilities import *

from matplotlib import pyplot as plt

from sklearn.linear_model import BayesianRidge

HARTREE_TO_EV = 27.211386245988

train_subset = "0:10000" #input for ase.io.read command

test_subset = "10000:15000" #input to ase.io.read command

environments_for_fitting = 1000 #number of environments to fit nice transfomers

grid = [150, 200, 350, 500, 750, 1000, 1500, 2000, 3000, 5000, 7500,

10000] #for learning curve

#HYPERS for librascal spherical expansion coefficients

HYPERS = {

'interaction_cutoff': 6.3,

'max_radial': 5,

'max_angular': 5,

'gaussian_sigma_type': 'Constant',

'gaussian_sigma_constant': 0.05,

'cutoff_smooth_width': 0.3,

'radial_basis': 'GTO'

}

#our model:

def get_nice():

return StandardSequence([

StandardBlock(ThresholdExpansioner(num_expand=150),

CovariantsPurifierBoth(max_take=10),

IndividualLambdaPCAsBoth(n_components=50),

ThresholdExpansioner(num_expand=300, mode='invariants'),

InvariantsPurifier(max_take=50),

InvariantsPCA(n_components=200)),

StandardBlock(ThresholdExpansioner(num_expand=150),

CovariantsPurifierBoth(max_take=10),

IndividualLambdaPCAsBoth(n_components=50),

ThresholdExpansioner(num_expand=300, mode='invariants'),

InvariantsPurifier(max_take=50),

InvariantsPCA(n_components=200)),

StandardBlock(ThresholdExpansioner(num_expand=150),

CovariantsPurifierBoth(max_take=10),

IndividualLambdaPCAsBoth(n_components=50),

ThresholdExpansioner(num_expand=300, mode='invariants'),

InvariantsPurifier(max_take=50),

InvariantsPCA(n_components=200))

],

initial_scaler=InitialScaler(

mode='signal integral', individually=True))

train_structures = ase.io.read('methane.extxyz', index=train_subset)

test_structures = ase.io.read('methane.extxyz', index=test_subset)

all_species = get_all_species(train_structures + test_structures)

train_coefficients = get_spherical_expansion(train_structures, HYPERS,

all_species)

test_coefficients = get_spherical_expansion(test_structures, HYPERS,

all_species)

#individual nice transformers for each atomic specie in the dataset

nice = {}

for key in train_coefficients.keys():

nice[key] = get_nice()

for key in train_coefficients.keys():

nice[key].fit(train_coefficients[key][:environments_for_fitting])

As was discussed in the first tutorial, ThresholdExpansioner sorts all pairs of inputs by their pairwise importances and, after that, produces the output only for a fixed number of the most important pairs. This number is controlled by num_expand.

However, there are two reasons why the real number of covariants after ThresholdEpansioner might be different from the specified one. 1) Some pairs of input covariants do not produce features to all lambda channels. Particularly, pair of input covariants with some l1 and l2 produces covariants only to lambda channels where |l1 - l2| <= lambda <= l1 + l2. Thus, the real number of features after ThresholdExpanioner would be smaller than the specified one in num_expand.

Pairwise importances can have a lot of collisions. For instance, it is impossible to select such a threshold to filter out exactly 3 pairs from the set of pairs with the following importances [1, 1, 2, 2]. It is possible to filter out either 0, either 2, either 4, but not exactly 3.

Thus, it is a good idea to have the possibility to look at the actual amount of intermediate features.

StandardSequence has a method get_intermediat_shapes(). It returns intermediate shapes in the form of nested dictionary:

intermediate_shapes = nice[1].get_intermediate_shapes()

for key in intermediate_shapes.keys():

print(key, ':', intermediate_shapes[key], end='\n\n\n')

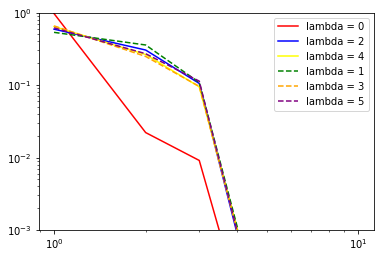

Spectrums of pcas can be accessed in the following way: (convenient getters will be inserted in the next version of NICE)

def proper_log_plot(array, *args, **kwargs):

'''avoiding log(0)'''

plt.plot(np.arange(len(array)) + 1, array, *args, **kwargs)

plt.ylim([1e-3, 1e0])

colors = ['r', 'g', 'b', 'orange', 'yellow', 'purple']

print("nu: ", 1)

for i in range(6): # loop over lambda channels

if (nice[6].initial_pca_ is not None):

if (nice[6].initial_pca_.even_pca_.pcas_[i] is not None):

proper_log_plot(

nice[6].initial_pca_.even_pca_.pcas_[i].importances_,

color=colors[i],

label="lambda = {}".format(i))

for i in range(6): # loop over lambda channels

if (nice[6].initial_pca_ is not None):

if (nice[6].initial_pca_.odd_pca_.pcas_[i] is not None):

proper_log_plot(

nice[6].initial_pca_.odd_pca_.pcas_[i].importances_,

'--',

color=colors[i],

label="lambda = {}".format(i))

plt.yscale('log')

plt.xscale('log')

plt.legend()

plt.show()

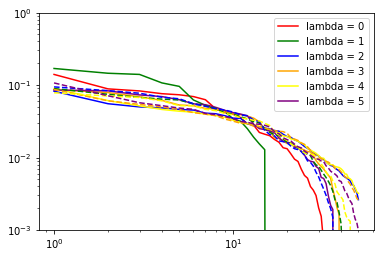

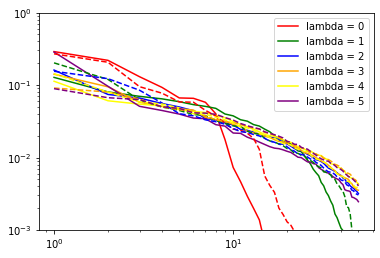

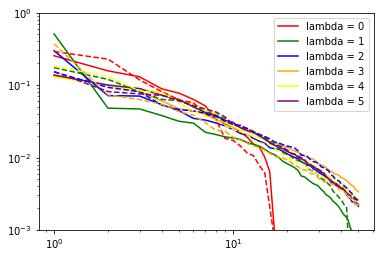

for nu in range(len(nice[6].blocks_)): # loop over body orders

print("nu: ", nu + 2)

for i in range(6): # loop over lambda channels

if (nice[6].blocks_[nu].covariants_pca_ is not None):

if (nice[6].blocks_[nu].covariants_pca_.even_pca_.pcas_[i]

is not None):

proper_log_plot(nice[6].blocks_[nu].covariants_pca_.even_pca_.

pcas_[i].importances_,

color=colors[i],

label="lambda = {}".format(i))

for i in range(6): # loop over lambda channels

if (nice[6].blocks_[nu].covariants_pca_ is not None):

if (nice[6].blocks_[nu].covariants_pca_.odd_pca_.pcas_[i]

is not None):

proper_log_plot(nice[6].blocks_[nu].covariants_pca_.odd_pca_.

pcas_[i].importances_,

'--',

color=colors[i])

plt.yscale('log')

plt.xscale('log')

plt.legend()

plt.show()

(checks if pca instance is None are needed since it would be None if the number of features for corresponding lambda channel would be zero after the expansion step)

Inner class for single Lambda channel inherits from sklearn.decomposition.TruncatedSVD (PCA without centering the data, which would break covariant transformation). Thus, in addition to .importances_, .explained_variance_ and .explained_variance_ratio_ are also accessible.

importances_ (which are used by subsequent TresholdExpansioners) are explained_variance_ normalized not to variance of input as explained_variance_ratio_, but to variance of output:

print(np.sum(nice[6].blocks_[1].\

covariants_pca_.even_pca_.pcas_[2].explained_variance_))

print(np.sum(nice[6].blocks_[1].\

covariants_pca_.even_pca_.pcas_[2].explained_variance_ratio_))

print(np.sum(nice[6].blocks_[1].\

covariants_pca_.even_pca_.pcas_[2].importances_))